I spoke to smart glasses powered by Google’s Gemini AI, and they told me what I was seeing. The AI was able to summarize the page of a book I was reading and identify the location in a YouTube video I was watching. The experience felt like an emerging future where information is easier to access than ever. Right now we may be talking to AI on our phones and PCs, but Google wants it to live on our faces, too.

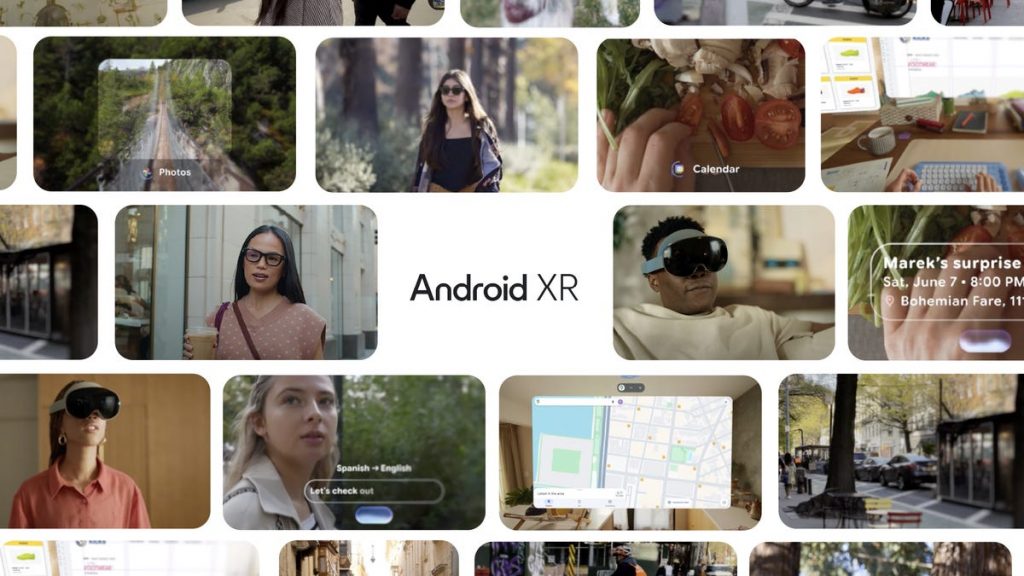

I first tried out Google’s new Android XR system last December, and now the company is ready to spill more details. Google already told us last year that glasses are a major part of its future product plans. Now, in advance of the Google I/O developer conference, I had an exclusive conversation with Shahram Izadi, Google’s VP and GM for Android XR, about what to expect next. It starts with a Samsung mixed-reality headset later this year, and continues with lots of glasses after that, including from Warby Parker.

Smart glasses are being increasingly discussed among several big tech giants — Apple, Meta, and Google — as the next frontier for AI and computing, despite sales of these devices still being far lower than phones. Glasses that can see everything you see, analyze the visual data and communicate with you using AI could be the next big tech gadget. But we’re not there yet.

Google’s approach with Android XR devices today is to augment existing phones, not replace them. “I know some people think about glasses one day replacing smartphones,” says Izadi. “I think it will be this growing ecosystem approach. But we do feel that XR is going to be the next frontier for Gemini, and for AI.”

Google’s new vision for glasses: AI with senses

With Android XR, Google is getting back into the glasses game. After taking a break from VR and AR for years (Google Glass arrived back in 2013 and Daydream VR headsets debuted in 2016), the search engine giant is facing hefty competition today.

Meta, the parent company of Facebook, has a head start in XR (the industry’s term for AR, VR and AI-connected headsets and glasses) with its Quest products and AI-infused Meta Ray-Bans. The latter featured in ads, starring Chris Pratt and Chris Hemsworth, that debuted during the Super Bowl. And Apple already has its expensive Vision Pro, with reports of a push to glasses in the next few years.

Google’s return to the landscape will start with a mixed-reality headset co-developed with Samsung, called Project Moohan. But after that, it looks like it’s all glasses, with a series of steps on the road to true AR that floats displays in front of your eyes.

Izadi feels glasses are the logical next step for AI because the hardware is more sensor-filled, contextual and hands-free, and because AI agents can fit in easily there. “Those AI assistants have additional contextual information from these XR devices. They can understand you and your setting in richer ways.”

Google glasses guided by Gemini, connecting to Google apps

First, Google’s making a developer-focused pair of AR glasses, manufactured by Xreal, called Project Aura. Then, there will be AI-infused glasses coming from eyewear partners. And at the same time, Samsung will be working with Google on a reference design for next-generation AR glasses.

The common thread in all of these products is Gemini AI.

Android XR will also connect with the Google Play app store, similar to how Apple’s Vision Pro can connect to Apple’s iOS App Store. For glasses, however, that bridge is going to take a while longer to build. Izadi says that Project Aura is the start of working on Google Play support for glasses in AR, while AI-assisted glasses similar to Meta Ray-Bans will lean on Gemini to hook into information on your phone, in versions with and without displays.

Project Aura: Xreal-made glasses that will start to explore AR

The first step, via a partnership with Xreal, is a tethered pair of glasses that won’t be all-day wearables, but will extend apps and experiences onto displays. According to Izadi, they will have a 70-degree field of view. That matches the field of view of Meta’s Project Orion smart glasses I tried out last year, but it doesn’t sound like Project Aura will have the same transparent lenses or wireless features. The glasses will have two cameras onboard to allow motion tracking and possibly hand tracking, similar to Xreal’s existing Air 2 Ultra glasses.

Xreal, a manufacturer of tethered (wired) display glasses for years, has already been making headway towards things approaching AR. Its latest glasses, the Xreal One, can already extend displays a bit with connected laptops, while Xreal’s recent partnership with Spacetop on Windows laptops goes further by floating many apps and windows at once. Google and Xreal’s partnership could do something similar with Google Play apps in-glasses.

“Lightweight, portable XR devices represent a major evolution from bulkier headsets. We’ve had devices like this as our north star for years. And every time we’ve iterated on our technology, we’ve moved closer to what Project Aura represents today,” says Chi Xu, Xreal’s founder and CEO. I tried Xreal’s first glasses, called Nreal Light, way back, and they were aiming to be Magic Leap-like AR glasses. Aura, in a sense, is coming full circle.

Izadi calls Xreal’s product a “plug and play” device, using a tethered cable similar to how Xreal’s glasses currently work. It’ll be a developer kit to start, working off a processing puck with a processor made by Qualcomm, with an eventual goal of connecting with other future devices like phones. “We have plans to do a pluggable device in the future. And that’s where there could be interesting collaborations with Samsung,” he told me.

The model for how these glasses will work reminds me a lot of what Qualcomm was working on as a plan to connect phones with AR glasses years ago. Hugo Swart, who used to be Qualcomm’s head of XR, is now senior director of XR ecosystems for Google.

AI glasses coming from Warby Parker, others, to compete with Meta Ray-Bans

Google’s also been working on its own internal AI-enabled glasses — ones I tried last year, and that are also being demoed at Google I/O. The company also has plans for the first wave of AI glasses you can actually buy, which will compete with Meta Ray-Bans. Google’s starting with some big eyewear partners: Warby Parker plus Korean fashion brand Gentle Monster and luxury eyewear manufacturer Kering Eyewear.

Izadi didn’t say exactly when these glasses will be available, or how much they’ll cost, but the eyewear partnerships are part of accelerating their arrival. “We are working as hard as we can to make AI glasses a reality,” says Izadi. “We have to build that entire pipeline. When you buy eyewear, especially if it has prescription lenses, you need to go to a store, you need to try the glasses on, you need to get your prescriptions measured. Those partnerships are really critical for that.”

Some of Google’s planned AI features for glasses sound similar to Meta’s Ray-Bans: music playback, sending messages, describing what the cameras see and live translation. But Google’s also exploring more complex features, such as checking calendars and appointments or controlling information on your phone. What interests me about Google’s approach here, compared to Meta, is how much more integrated the AI features could be to things on our phones.

Assistive features are also a big part of the equation. I already know people who use Ray-Bans to assist their vision, while Apple’s AirPods Pro 2 have hearing aid functions. Izadi agrees that a major goal is to have these glasses assist with hearing and vision loss, and work in other assistive capacities.

It doesn’t sound like those glasses are coming this year, but they could very well be coming in 2026.

Samsung and Google are also building AR glasses

Samsung is the biggest and most mysterious part of the equation. Google’s using Samsung to build its first Android XR device, Project Moohan, which is coming out sometime later this year. That headset, which I’ve already demoed and which Google is also showing off at I/O, is much like Apple’s Vision Pro in spirit, running 2D Google Play apps, 3D apps and Gemini Live AI that can recognize what’s on screen.

But Samsung’s also building out the hardware and software for true AR glasses along with Google, a step that Izadi sees as crucial for finalizing the vision: “Samsung and Google will be working together on the core platform, which includes reference hardware,” he told me. Those steps look even further off, though. In the meantime, the larger Project Moohan looks to be the way to explore AR-like experiences, while first steps for glasses are worked on with Project Aura and AI glasses start arriving in stores even sooner.

AI, AI and more AI

While all these glasses and headsets may sound like a lot of moving pieces, there’s a clear throughline: Gemini. Google is focused on making its AI work with cameras on wearable devices. As those AI tools evolve, the rest of the AR glasses picture may evolve alongside them.

Right now, Google has to play catch-up. Moohan looks promising in terms of showing off how AI can mix with VR and AR, but the price of that headset may end up being as off-putting for most would-be buyers as the Vision Pro’s $3,500 price tag. As for Project Aura, Xreal says it will get into more details at the AR-focused AWE conference in June.

Izadi acknowledges that some of these early glasses will have tradeoffs compared to the company’s end goals for full AR glasses, but he sees a full product lineup — ranging in price, features, size and processing power — as the right path.

“We have this spectrum: from video see-through headsets, to optical see-through headsets that are wide field-of-view, to Xreal-like devices, to truly AR-like glasses, to AI glasses which are more heads up or even display-less,” says Izadi. Google looks to be spreading its bets as the capabilities of AR glasses are still being developed.

Of all of these upcoming products, the AI-enabled glasses look like the winning bet to me. Google is focusing on AI’s abilities now, and building out the rest of the augmented reality ideas for a future to come.