As we count down toward the final days of 2023, there are two ways to sum up the year in AI: Generative AI is good for humanity, and generative AI is bad for humanity.

As usual, the truth lies somewhere in between. (I won’t say middle because the opportunities and risks are still unfolding.) But I was happy to hear a hopeful take from actor Jack Black. Reflecting on his turn in The Super Mario Bros. Movie earlier this year and noting that School of Rock just turned 20 (wow, right?), Black said he doesn’t think we’re headed for a Terminator world in the future.

«It’s so new that it’s hard to really say what the future holds, but I don’t have all doom and gloom. I don’t feel like, ‘Oh no, it’s going to be like Terminator where it comes and destroys all the human jobs.’ I’m not convinced about that because I can admit, I don’t know, and I’m hoping that it’s going to be a great new world and that it’s going to be a tool that all of us can use to make ourselves better and make the world better,» Black said in an interview with The Hollywood Reporter.

«How about it saves the world? How about that possibility? I [have] huge hope, because we could use some saving. Maybe it’ll cure cancer. Maybe it’ll perfect space travel. Maybe it’ll do some things that we can’t even imagine that are great.»

Maybe, Jack Black. Maybe.

He’s not the only one with an optimistic outlook. In 2023, investors poured nearly $10 billion into gen AI startups, more than double the $4.4 billion invested the year before, according to GlobalData. Why is that notable? Because overall venture capital money put into tech startups fell year over year, to $224 billion in 2023 from $418 billion in 2022.

«One reason to bet big on gen AI is the general-purpose nature of the technology, which startups are using to solve diverse challenges,» said Adarsh Jain, director of the Financial Markets team at GlobalData. He cited examples of startups using AI to help tackle language translation, design and engineer proteins, work on weather and climate solutions, and build new conversational search services. «Gen AI is one of the few technology innovations to have impacted the broad spectrum of industries in such a short time.»

Here are the other doings in AI worth your attention.

Musk’s Grok AI beta released, expect ‘many issues at first’

There’s a saying in tech that when a product is free, you — the user — are the product because the company is using you as its beta tester while collecting information about your usage and data to potentially sell to advertisers and marketers.

As usual, billionaire entrepreneur/bloviator Elon Musk is doing things a different way. He’s just released his Grok AI in beta to subscribers who pay $16 a month for his X Premium+ service in the US. «There will be many issues at first, but expect rapid improvement almost every day,» Musk said in a Dec. 8 post on X.

Grok, announced Nov. 4, is Musk’s rival to OpenAI’s ChatGPT and other conversational chatbots, including Microsoft Bing and Anthropic’s Claude.ai. Modeled on The Hitchhiker’s Guide to the Galaxy, Grok «is designed to answer questions with a bit of wit and has a rebellious streak, so please don’t use it if you hate humor!» the company behind Grok, xAI, said.

The plan was to roll out Grok in the US over a week, available first to people who’ve been paying for X (formerly Twitter) the longest. «The longer you’ve been a subscriber, the sooner you can grok,» the company said in a post. «Don’t forget your towel!»

Grok is the first product from xAI, a company whose mission is to «understand the true nature of the universe.» As for the towel reference, tech insider site Mashable offers this recap:

«Grok takes its name from the 1961 sci-fi novel Stranger in a Strange Land by Robert Heinlein. In it, the term ‘grok’ is a Martian word that means ‘to drink,’ as well as ‘to understand’ or ‘to comprehend.’ Twitter/X’s towel reference is a nod to Douglas Adams’ The Hitchhiker’s Guide to the Galaxy franchise, in which towels feature prominently as an important tool for travelers exploring the universe.»

Choosing holiday gifts, with an AI assist

Many of us are in the last week of holiday shopping. If you’re still trying to figure out what to buy for family or friends, AI, as CNET’s Corrinne Reichert found, is great at generating lists. So she used ChatGPT, Microsoft Bing and Google Bard to help her with gift ideas for her family.

«The secret lies in giving [the AI] a prompt with descriptors and hints,» Reichert found. But she also found that being too specific can be limiting. «I gave as many details as I could think of about each person’s hobbies, interests and likes, down to how many children or grandchildren they had and their ages. The first person I sought a gift suggestion for was my husband, and among the eight likes I listed, I mentioned Marvel movies. This turned out to be a mistake; almost every single one of the AI’s recommendations pointed to Marvel merchandise.»

While you may applaud the Marvel suggestions, Reichert said the goal is to refine your prompts as you get recommendations that are going off in the wrong direction. The other thing to know: many of the AIs don’t include the cost of an item or tell you where you can buy it.

The bottom line, though, is that spending a few minutes with the AI on gift suggestions «will give you a decent list of gift suggestions as a starting point. But you’ll then have to search for them yourself on Amazon, Etsy or other stores to actually find, examine and buy them,» she said. «It’s nothing groundbreaking, and these are things I might’ve thought of anyway, but AI takes the writer’s block out of the list-making process.»

Meta’s Ray-Ban glasses get AI boost to see what you’re seeing

Meta is trying out new AI capabilities for its second-generation Ray-Ban glasses, and CNET’s Scott Stein got to test them out. Basically, Meta has outfitted a pair of Ray-Bans with on-glasses cameras and audio sensors that allow them to look at images and then tell you how they’re interpreting the images using gen AI tech. The tech is called «multimodal AI» because it uses camera and voice chat together.

Stein did a test to see if the $300 glasses could tell him which among some tea packets were caffeine-free. «I was staring at the glasses and looked at a table with four packets, their caffeine labels blacked out with a marker. A little click sound in my ears was followed by Meta’s AI voice telling me that the chamomile tea was likely caffeine-free. It was reading the labels and making judgments using generative AI.»

The feature is limited right now, Stein said: It can only recognize what you see by snapping a photo, which the AI then analyzes. «You can hear the shutter snap after making a voice request, and there’s a pause of a few seconds before a response comes in. The voice prompts are also wordy: Every voice request on the Meta glasses needs to start with ‘Hey, Meta,’ and then you need to follow it with ‘look and’ (which I originally thought needed to be ‘Hey, Meta, look at this’) to trigger the photo-taking, immediately followed with whatever you want the AI to do. ‘Hey, Meta, look and tell me a recipe with these ingredients.’ ‘Hey, Meta, look and make a funny caption.’ ‘Hey, Meta, look and tell me what plant this is.’»

Still, Stein’s TL;DR is that the possibilities for the tech, currently in beta, are «wild and fascinating.» And unlike the pin-size, $699 AI wearable from Humane that’s generating buzz ahead of its 2024 release (and will require a $24 per month subscription fee), Meta’s glasses are available today for you to try out.

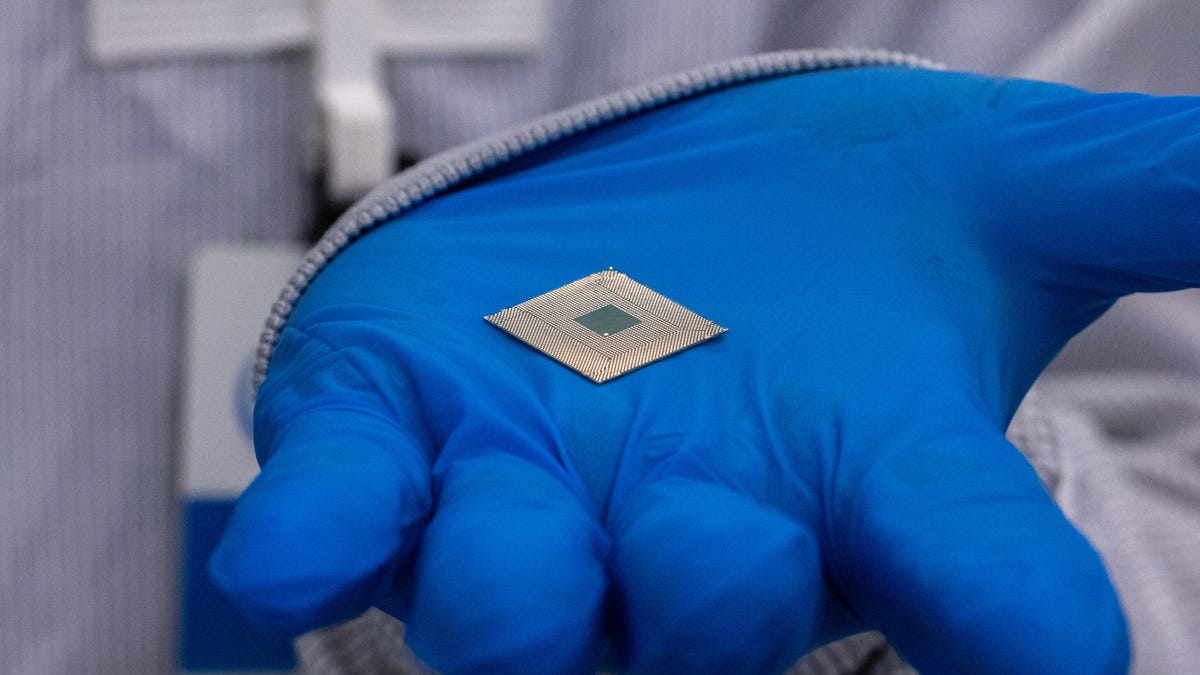

Intel’s building AI chips – designed by AI

AI chatbots aren’t just powered by large language models (LLMs). You also need hardware — the processors that underpin the compute power that handles all the data processing needed to deliver results in a conversational format.

CNET’s Stephen Shankland took a deep dive into how some of the world’s most important processor makers — including Intel and Nvidia — are now using AI to «help accelerate the design of those very processors.»

«AI tools catch bugs early in the design process so chips come to market faster, and they oversee manufacturing so more of the tiny slices of silicon that Intel makes end up inside products instead of in the trash can,» reports Shankland. «For Meteor Lake, Intel’s first major processor made of multiple ‘chiplets’ stacked into one package, the AI tools help find the best way to sell each processor given a multitude of slight differences in each processor’s components.»

A little bit on the nerdy side, yes, but helpful in understanding what goes into these chips, which in turn are bringing AI capabilities to your laptop. Happy reading.

OpenAI signs another copyright deal with a major publisher

OpenAI made news this summer after signing a deal with the Associated Press to license its news archive back to 1985. It also told publishers that they could opt out of having their internet content scraped and used to help train the LLM behind OpenAI’s ChatGPT.

This was notable news because authors, publishers and other copyright holders aren’t happy with gen AI companies essentially «stealing» their copyrighted material without their knowledge, permission or compensation.

This week, OpenAI announced it signed another content licensing deal for news summaries with Axel Springer, which owns Politico and Business Insider. The goal: to «deepen beneficial use of AI in journalism.» As with the AP deal, financial terms weren’t disclosed.

But the two companies did make a nod to the fact that the deal is intended to create «new financial opportunities that support a sustainable future for journalism.» OpenAI gets «recent and authoritative content on a wide variety of topics,» the companies said, while Axel Springer’s media brands get a new distribution channel. «ChatGPT’s answers to user queries will include attribution and links to the full articles for transparency and further information.»

Publishers, including The New York Times, have reportedly been in talks with OpenAI after telling the company they don’t want their copyrighted content scraped and used without their permission.

Spotify cuts jobs as part of its push for ‘efficiency’ with AI

While some economists say the gen AI revolution will spur job growth as new kinds of roles are invented (while acknowledging that current jobs will also be replaced due to the tech), companies are already starting to rethink their labor force as they look to boost productivity and profits with AI in 2024.

This week, financial analysts called out the 1,500 jobs cut at audio streaming service Spotify, which is looking to AI tech to improve business efficiency — and, analysts say, boost profit. «Spotify is leveraging AI across its platform, launching AI DJ, simulating a traditional radio experience, in 50 additional markets and rolling out AI Voice Translation for podcasts,» Justin Patterson, equity research analyst at KeyBanc Capital Markets, wrote in a research note cited by CNN. «Coupled with audiobooks rolling out to Premium Subscribers, we believe Spotify has several opportunities to drive engagement and eventually stronger monetization.»

When asked for comment, Spotify referred CNN to the comments made by CEO Daniel Ek in its October earnings call, where the word efficiency, noted CNN, was used more than 20 times.

«The primary way you should think about these [AI] initiatives, [is] it does create greater engagement and that greater engagement means we reduce [subscriber] churn,» Ek said during Spotify’s earnings call. «Greater engagement also means we produce more value for consumers. And that value to price ratio is what then allows us to raise prices like we did this past quarter with great success.»

Term of the week: Prompt engineering, redux

Prompt engineering, as I explained back in August, is a new job that’s emerged in the past year as AI chatbots have taken hold. The job starts with being able to ask the right questions, with your questions known as prompts. If your prompts aren’t great, chances are the answers you get back won’t be either — or that you’ll find the whole conversation frustrating. In tech talk, that goes back to a concept known as GIGO — garbage in, garbage out. That’s why prompt engineering has been called a key job of the future.

And the jobs can come with salaries as high as $375,000 and don’t always require a tech degree, according to some reports, as companies work to build up a library of prompts.

I expect the role of the prompt engineer will evolve over time (as will the salary ranges). So I asked Raphael Ouzan, founder and CEO of A.Team, which oversees a network with over 9,000 freelance tech workers, to define prompt engineering. As expected, there’s not a simple job description.

«Prompt engineering is used incredibly broadly and is one of the misunderstood terms in AI,» Ouzan said in an email exchange. Instead, he said, we should think about it at three levels:

Prompt Writer: You know how to craft prompts to get the output you want from AI tools. When most people say «Prompt Engineering,» they’re really just talking about Prompt Writing.

Prompt Designer: The next level is someone who can solve business problems through prompts that return the answer you need, and can be integrated into real-life workflows.

Prompt Engineer: Someone who knows how to use prompts to build systems and integrate them together. A true prompt engineer thinks and works like an engineer.

Editors’ note: CNET is using an AI engine to help create some stories. For more, see this post.